Optimizing Quality Assurance: Harnessing the Power of AI for Efficient and Effective Software Testing


In today's digital era, Artificial Intelligence (AI) is shaping the future of many aspects of Quality Assurance (QA). This evolution has produced strategies for integrating quality effectively into development processes.

According to recent industry statistics, the market for AI in Quality Assurance is anticipated to reach USD 4.0 billion by 2026, 44% of firms have already integrated AI into their QA procedures, and 68% of experts believe AI will have the most significant impact on software testing in the future.

Traditional manual testing techniques are effective but can be laborious, expensive, and prone to human error. AI-powered quality assurance applies AI techniques and tools to improve the efficiency and effectiveness of software testing.

This blog details which AI techniques, models, and tools to apply for each QA objective, along with best practices and forecasted benefits.

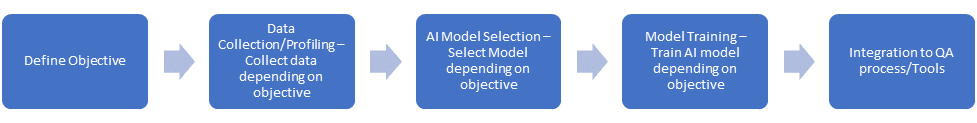

Generating an AI model

The most common steps to generate an AI model are: define the QA objective -> collect data -> select the AI model -> train the model -> integrate it into QA, as depicted below.

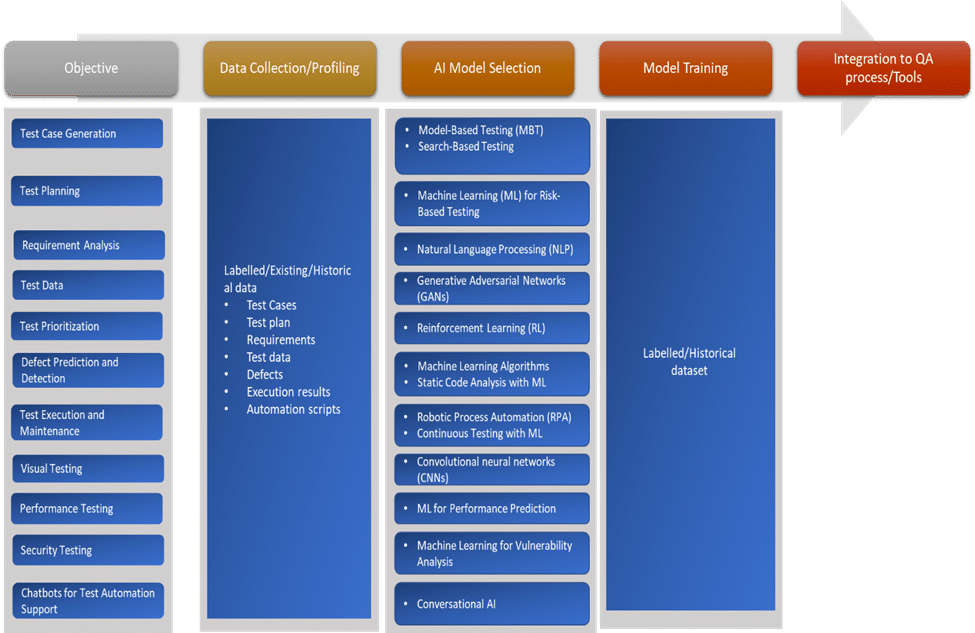
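The five steps above can be sketched end to end with a deliberately tiny example. This is a minimal illustration, not a production approach: the objective (defect prediction), the `ModuleRecord` type, the churn feature, the sample data, and the single-threshold "model" are all assumptions made up for this sketch.

```python
from dataclasses import dataclass

# Step 1: define the QA objective (here: flag modules likely to contain defects).
OBJECTIVE = "defect prediction"

@dataclass
class ModuleRecord:
    name: str
    churn: int        # lines changed recently (hypothetical feature)
    had_defect: bool  # label taken from past releases

# Step 2: collect data (made-up historical records for illustration).
HISTORY = [
    ModuleRecord("auth", 420, True),
    ModuleRecord("billing", 310, True),
    ModuleRecord("search", 90, False),
    ModuleRecord("profile", 40, False),
    ModuleRecord("reports", 150, False),
    ModuleRecord("checkout", 280, True),
]

# Step 3: select the model (a single churn threshold, the simplest classifier).
# Step 4: train it by picking the threshold with the fewest misclassifications.
def train_threshold(records):
    best_threshold, best_errors = 0, len(records) + 1
    for candidate in sorted({r.churn for r in records}):
        errors = sum((r.churn >= candidate) != r.had_defect for r in records)
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold

# Step 5: integrate into QA by flagging high-risk modules for extra testing.
def high_risk_modules(current_churn, threshold):
    return sorted(name for name, churn in current_churn.items() if churn >= threshold)

threshold = train_threshold(HISTORY)
flagged = high_risk_modules({"auth": 35, "search": 300, "reports": 500}, threshold)
print(threshold, flagged)
```

A real project would replace the threshold with a trained ML model and richer features, but the flow from objective to integration stays the same.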

AI can help achieve various QA objectives, such as test case generation, test execution and maintenance, defect prediction and detection, test data generation, and test analytics and reporting. The AI model to be selected, trained, and integrated into the QA process and tools depends on the QA objective to be achieved. Model selection by QA objective is outlined below:

- Test Case Generation: Model-based testing (MBT) and search-based testing techniques automatically generate test cases from an analysis of code, requirements, or existing test cases, helping to achieve better test coverage and efficiency. Tools such as EvoSuite (search-based) and Randoop (feedback-directed random testing) are examples.

- Test Planning: Predictive analytics models, such as machine learning (ML) models for risk-based testing, can assist in test planning by forecasting potential risks, testing effort, and resource requirements, optimizing overall QA planning.

- Requirements Analysis: NLP techniques are used to analyze natural language requirements, facilitating the extraction of test scenarios and aiding in creating test cases.

- Test Data: Generative Adversarial Network (GAN) models assist in generating diverse and realistic test data, ensuring comprehensive test coverage and optimizing data-related QA activities through AI-driven test data generation tools.

- Test Case Prioritization: Reinforcement learning models and other ML-based prioritization algorithms rank test cases based on code changes, historical failure data, and business impact, enabling more effective testing with limited resources.

- Defect Prediction and Detection: Statistical and machine learning models predict where defects are likely to occur in the code, assisting in focusing QA efforts on high-risk areas and improving defect detection.

- Test Execution and Maintenance: Test automation tools with AI capabilities execute test cases automatically and adapt to changes in the application, reducing the need for manual intervention in test script maintenance.

- Visual Testing: Computer vision models, such as convolutional neural networks (CNNs), are used for visual testing by analyzing and comparing the visual appearance of applications to identify layout issues, UI inconsistencies, or unexpected changes. Tools such as Applitools and Percy support this kind of testing.

- Performance Testing: AI can simulate realistic user behavior and load patterns, assisting in performance testing scenarios. Load testing tools with AI capabilities use this to help optimize application performance.

- Security Testing: AI models assist in identifying security vulnerabilities by analyzing code, patterns, and behaviors to detect potential threats, enhancing the security testing process through AI-based vulnerability scanning tools.

- Chatbots for Test Automation Support: AI-driven chatbots aid testers by answering queries, guiding them through testing processes, and facilitating team communication and collaboration in QA activities. Example: chatbots integrated with testing platforms.
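To make one item from the list concrete, the test case prioritization idea (ranking by code changes, historical failure data, and business impact) can be sketched as a weighted score. Note that this is a fixed-weight heuristic standing in for a learned model, not reinforcement learning; the `CandidateTest` type, the weights, and the sample tests are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class CandidateTest:
    name: str
    covered_files: set
    recent_failure_rate: float  # fraction of recent runs that failed, 0.0 to 1.0
    business_impact: int        # 1 (low) to 5 (critical)

# Illustrative weights (assumptions); a learned model would tune these from data.
W_CHANGE, W_FAILURE, W_IMPACT = 0.5, 0.3, 0.2

def priority_score(test, changed_files):
    # 1.0 if the test exercises any file touched by the current change.
    touches_change = 1.0 if test.covered_files & changed_files else 0.0
    return (W_CHANGE * touches_change
            + W_FAILURE * test.recent_failure_rate
            + W_IMPACT * test.business_impact / 5)

def prioritize(tests, changed_files):
    # Highest score first, so the most relevant tests run earliest.
    return sorted(tests, key=lambda t: priority_score(t, changed_files), reverse=True)

tests = [
    CandidateTest("test_login", {"auth.py"}, 0.10, 5),
    CandidateTest("test_search", {"search.py"}, 0.40, 2),
    CandidateTest("test_checkout", {"cart.py", "payment.py"}, 0.05, 5),
    CandidateTest("test_profile", {"profile.py"}, 0.00, 1),
]
ordered = prioritize(tests, changed_files={"payment.py"})
print([t.name for t in ordered])
```

With a change to `payment.py`, the checkout test moves to the front even though its recent failure rate is low, which is the behavior a team with limited test time would want.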

By incorporating self-healing and interoperability capabilities in QA processes, organizations can enhance their testing procedures’ efficiency, reliability, and adaptability, contributing to the overall quality of software applications.
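As a rough illustration of what self-healing means in test automation, the sketch below tries a list of alternative locators and promotes whichever one still works. It is a rule-based fallback, not an AI model, and the `PAGE` dictionary, locator tuples, and function names are hypothetical stand-ins for a real UI automation framework.

```python
# A fake "page": maps (strategy, value) locators to element identifiers.
# The original ("id", "submit") locator no longer exists after a UI change.
PAGE = {
    ("css", "button.submit-order"): "submit-button",
    ("text", "Place order"): "submit-button",
}

def find_element(page, locators):
    """Try locators in order; return (element, locator_used) or raise LookupError."""
    for locator in locators:
        if locator in page:
            return page[locator], locator
    raise LookupError(f"no locator matched: {locators}")

def self_healing_find(page, locators):
    """Locate an element and move the working locator to the front of the list."""
    element, used = find_element(page, locators)
    if used != locators[0]:
        # "Heal" the script: the next run tries the working locator first.
        locators.remove(used)
        locators.insert(0, used)
    return element

locators = [("id", "submit"), ("css", "button.submit-order"), ("text", "Place order")]
element = self_healing_find(PAGE, locators)
print(element, locators[0])
```

AI-based tools typically go further by scoring candidate elements on attributes, position, and visual similarity, but the repair loop follows the same pattern.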

The forecasted key benefits of applying these models to QA:

- 25-30% higher software quality

- 20-50% increase in test coverage

- 30-60% reduction in testing time, effort, and cost

Best Practices for Developing AI Models in Testing

Developing AI models for testing involves adhering to several best practices.

First and foremost, it’s essential to possess a solid grasp of the domain’s testing concepts and challenges. Additionally, utilizing high-quality, diverse, and representative data for training and testing is crucial for robust model performance. Regular updates and retraining of models are necessary to keep pace with evolving applications, environments, and requirements. Collaboration among testing experts, AI specialists, and domain experts facilitates comprehensive problem-solving.

Integrating human expertise is paramount, particularly for intricate decision-making processes, validating model outputs, and managing exceptions. Ethical considerations, such as biases and privacy concerns, must be carefully addressed when employing AI in testing scenarios. Transparency in AI model functionality is vital, especially in critical testing contexts. Training users on effectively interacting with AI-driven models, like chatbots, is essential for seamless integration.

Establishing user feedback mechanisms to assess AI model performance aids in continual improvement. Designing AI-driven testing solutions capable of scaling to accommodate the complexity and scale of systems being tested is imperative. Finally, ensuring compliance with relevant regulations and standards is essential to maintain integrity and reliability in AI-driven testing practices.
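One simple way to picture the feedback and retraining practices above is a rolling accuracy check over tester-confirmed outcomes. This is a minimal sketch under stated assumptions: the `FeedbackMonitor` class, the window size, and the 0.8 accuracy threshold are hypothetical choices, not recommended values.

```python
from collections import deque

class FeedbackMonitor:
    """Tracks how often model predictions match outcomes confirmed by testers."""

    def __init__(self, window=5, min_accuracy=0.8):
        self.outcomes = deque(maxlen=window)  # keeps only the most recent results
        self.min_accuracy = min_accuracy

    def record(self, predicted, confirmed):
        # Store True when the prediction agreed with the tester's verdict.
        self.outcomes.append(predicted == confirmed)

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_retraining(self):
        # Only decide once the window is full, to avoid reacting to a few samples.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.min_accuracy

monitor = FeedbackMonitor(window=5, min_accuracy=0.8)
feedback = [(True, True), (True, False), (False, False), (True, False), (False, False)]
for predicted, confirmed in feedback:
    monitor.record(predicted, confirmed)
print(monitor.accuracy(), monitor.needs_retraining())
```

In practice the retraining signal would feed a review step where testing and AI specialists decide whether the model, the data, or the application has changed.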

Conclusion

AI models aim to streamline and optimize various aspects of the QA process, ultimately contributing to delivering high-quality software products. The integration of AI in QA continues to evolve with advancements in technology and the specific needs of software development and testing teams. It is important to note that teams often combine multiple AI techniques to address the complexities of modern software applications and testing requirements. The success of AI in testing heavily depends on the quality and diversity of the training data. Continuous monitoring and feedback loops are essential for maintaining and improving the effectiveness of the AI models over time.

Cigniti has the expertise and has helped clients deliver high-quality software products using AI. iNSta is Cigniti’s intelligent scriptless test automation platform that enables building production-grade test automation suites and helps improve overall QA efficiency and effectiveness.

Need help? Schedule a discussion with our Quality Assurance and Artificial Intelligence experts to learn more about harnessing the power of AI for efficient and effective software testing.
